TL;DR: instead of burdening our artist(s) with generating a fixed set of image parts, we can put that burden on the programmer side, and the upside is we can improve all of face gen iteratively as new and better algorithms come out. For now, just look at the images. The basic idea is we (science community) now have the ability to generate (even photo realistic) images of faces from a random number, or even from a text string (e.g. "angry male middle aged face with sideburns"). This post describes, a bit from "ground up" recent advances on how machine learning can "understand" images.

Below is an example of generated images, i.e. given a random number it builds a fake face of a human that isn't real, like so:

This shows what is covered in the last section of this post, where you can generate a random face with a selected set of traits along different axes which they slide up/down in the gif above (alternative, gif as youtube video). These fake-humans look like fotomodels, because the algorithms have been trained on a data set of images of celebrities (image from pr-GAN [fn:1])

Introduction

You've probably heard a lot about machine learning - or "AI" as it's very often wrongfully refereed to. In this post I'll discuss some of the most "hot" advances, and with an inclination towards their potential for Pioneer, for 2D image creation, such as our infamous "face gen".

So first, when people talk about "AI", they almost always mean "machine learning", which is the new paradigm, where instead of explicitly programming the computer what to do, you let it detect pattern in data and "learn" from that. At its simplest it's glorified curve fitting. This is really taking off now because how well the output performs depends on the amount of input data. Thus all the "free" services by Google and Facebook: they want your data.

I though I start with basically a tour through the enormous groundbreaking progress (adjectives!) that has been made the most recent years, specifically when it comes to the tasks where humans previously were superior to machines, such as pattern recognition, and image identification and classification.

I also include lots of refrence-links, for completeness. (I explicitly make note of the ones I recommend following).

Artificial Neural networks (ANN)

This technique hark back to the 70s, but the low CPU power, and lack of data to train on made them not very useful. One major breakthrough was when US-postal service started using ANNs for reading addresses on envelopes in the 80s. Again, in the 90s they saw wide use for email classification to distinguish "spam" from "ham", based on the text, meaning the network gives probability of an email being "spam" or "ham", based on the occurrence of words like "I'm the Nigerian finance minister", or "special offer", etc.

However, classifying an image is far more arduous a task. If you teach an algorithm to associate a cat picture to belong to the "cat"-class, it should still consider it a "cat" if you move the image one pixel, or mirror it.

Image classification (CNN), post Anno 2012

What has really gotten the field of ANN to explode is the arrival of high performance "deep convolutional networks" (CNN). They have proven to be able to classify images with impressive accuracy. Specifically, there is a paper by Alex Krizhevsky (2012) [2] "AlexNet" that has been a real game changer, where a deep network was trained on 1,2 million images, labeled into 1000 different classes. After training (or "fitting" in old-lingo) (which took 5-6 days on two NVIDIA GTX 580) the network could classify new images with low error rate. When the network is shown new images it has never seen before, it should then estimate the top five most likely classes. (For anyone interested there is a video [3] that explains how this algorithm works, also a blog post [4].)

I think it's most impressive to see where - and how - the classification algorithm makes its errors. Below is figure 4 from the AlexNet paper. Top row shows correct (bold text) and classifications made (red bar); where we see its classifications: a cargo ship is a bit like a life boat and amphibian, or the leopard could also be a bit like a jaguar or cheetah. Bottom row shows misclassifications, and it's clear a human would have done the same mistakes: the picture of "cherries" sure looks like a dalmatian to me, and a bit "grapey" as well.

Key in doing this (and other) machine learning is to have data. A LOT of data. And this is why Google and all the other tech-giants are willing to provide you free storage, and encourage you to label your images (and friends). In the words of RMS: "If you're not paying for the product, you are the product!". These Internet giants have made their machine learning libraries freely available (e.g. Google's Tensorflow is released under Apache 2.0 open source license), however, the user data is what is the real key to their success. "Soylent green is people!"

For some ground up examples, and application to generating super mario levels / game design, please see medium blog post part 1 and part 2.

Generative adversarial networks (GAN)

GANs really exploded onto the scene a few years ago, with a couple of papers by Ian Goodfellow [5]. The simplified idea is you have two machine learning algorithms:

1. The "thief AI" gets a vector of random numbers, from which it tries to create a perfect forgery (e.g. a "dollar bill", or in our case: a human face)

2. The "police AI" takes an image as input, that's either the forgery from the "thief AI" in 1., or a real image (e.g. an image of a real human face), and it should try to distinguish if the image is real or fake.

So the optimization goal of both algorithms are "adversarial", and they co-evolve, thus training the "thief" to make very good forgeries, i.e. natural looking images from random numbers. The network does this by creating a "latent space" that encodes a meaning of what we want the output to be, so each point in this space will give different output images and moving in a path in latent space gives a smooth transitions between different prototypical dimensions of a face:

Figure 2b in Goodfellow (2014)

These images above have been generated by running a random vector (i.e. a coordinate in latent space) through a generator function, that returns a face (except right most column). Note: these faces don't belong to any real person, but are generated by the algorithm, from a random number. The right most column (column 6) are actual images of real humans that were the closest in latent space to the random images shown in column 5. They're included for comparison reason, to show that the algorithm hasn't just stored the trained real images* and returned them, but is actually learning the features of what makes up "a face" and encoded this in the latent space.

*(it's possible for a network to instead of encoding the underlying properties of faces, just store the real images its been trained on, (assuming the network is large enough to represent the raw training data). To make an analogous silly example to the field of image compression, where one uses the famous image of Lenna as a benchmark [6]: consider a compression algorithm that just checks if the image is Lenna (then compress input image to one bit, and return pre-stored image of Lenna when de-compressing) or not (then don't compress the image). Clearly that's "cheating", and something we want to avoid.)

The images are a bit fuzzy which has since then been addressed by other papers, read on for more, and I strongly recommend watching the amazing results in this short (6 min) youtube video, that generates images that look like celebrities.

Side note 1: Text to image generation(!)

There's a paper by Reed et. al (2016) [7], that implements text to image generation. Note: it's not text to image finding (e.g. google image search), but text to image generation. So the network doesn't only do natural language processing, but also image generation. There's a "Two minute paper" video on this article that is well worth watching for those who want to go deeper.

This image shows text to image generation, handling multiple objects and backgrounds (image is fig7 from the Reed paper [7]).

Side note 2: Super resolution

So we can go from a random number/vector to a new generated image (e.g. of a face), or from text to an image containing what the text describes. There's also been work to go from an existing low resolution image to a high resolution photo realistic one [8].

Image from paper, showing different algorithms increasing a low-resolution image from the right most, and the superior SRGAN algorithm

There's also work done on video prediction, i.e. add frames that are missing [9].

DCGAN (CNN + GAN!)

So what happens if you mix GANs, which are good at generating fake real-looking data (e.g. face images), with neural networks that do very well on "understanding" images, like convolutional neural networks (CNN). You get DCGAN (Deep convolutional GAN), as Radford (2015) [10] introduced, which is a very cool paper.

They show, (with images), that the latent space they find gives meaningful embedding, in that if you move around in it you will get continuous meaningful output, see image below, where they sampled 9 random points in latent space of a DCGAN trained on hotel room images, and then see how the output changes as you move around from these points, see image below:

The really cool stuff comes when they train the algorithm on faces. The latent space representation is so good you can do vector arithmetic on faces! Let me explain: The image below shows how taking the representation of "men with glasses" (a point in latent space) and subtracting position of "men without glasses" + "women without glasses" (in latent space), you get new points which generates output images of women with glasses:

For instance, you can choose to move in only one of the dimensions of your latent space, say, age [11]

Anime-GAN

As FluffyFreak posted a blog-url on IRC a while ago: there is a webpage that uses the GAN-method to generate anime characters, which is the outcome of a paper by Jin et al (2017) [12]. They train the network on 31255 images with 128x128 resolution (bigger resolution is more challenging), with different labels. Since some labels are more rare than others (common: blue eyes, blond, open mouth; uncommon: glasses, drill hair) the GAN is not equally good at generating characters under the constraint that it should have, say, glasses. However, the authors note that some rare features have been learned well, e.g. like orange eyes, as it appears the network can generalize hair and eye colour easily even if their occurrence in the training set is very low.

Example of generated random images jpg.

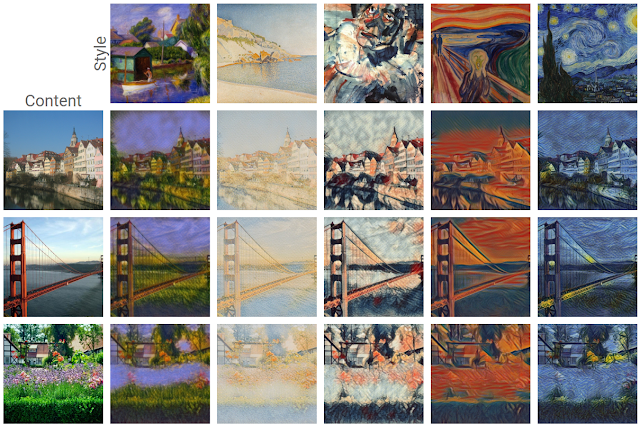

Style transfer - The way for pioneer(?)

Now for the grand finale: style transfer! This allows you to separate out the content of an image (e.g. an elephant, a face, the golden gate, etc.) from the style of a specific art/style you like (e.g. what makes a painting look like Monet, Munch, HR Giger, or like a comic-book/sketch) [13]; or change a horse to a zebra (see images in: pix2pix). There's a popular phone-app that does this, prisma (and painnt), on a pre-existing networks for e.g. Edvard Munch's "The Scream". The results can be very impressive, some examples below, showing content image on the left most column, and style images on the top row:

Taken from blog, another example jpg

New style-mappings can be generated using code on github for fast-neural-style transform (I've played around with this repo a bit). The idea for this section was that I'd here show the result of some style transfer, i.e. take pioneer face gen, which have good content (faces) but ugly style and convert them to e.g. sketch style or similar. Maybe even apply "nozmajner style" (or Evarchart style) to face gen content. Well, it's been a year since I actually wrote 95% of this post, so I better just post what I have now (nothing concrete, but only ideas) and then fill up later. But here's a 4 min video showing style transfer in action: youtube.

The later, 2017 paper, "Deep Photo Style Transfer" [14] shows better generalization and gives photo realistic output, rather than "painting like" as previous. I recommend looking at the pictures of the github implementation, seriously impressive stuff.

Below image shows two very impressive examples (row 1 & 2), where they apply the style of one face (e.g. old/young, for column 1 and 2, respectively) to the identity (person) of the other, i.e. the person must still look the same, so you can apply the "old" style to Clinton's face, and it still looks like a photo realistic image of Clinton [15], or apply Clinton's youth to the old face to get the younger version of the old woman (column 3&4):

For future reference, this is a good and exhausting resource on style transfers. For those wanting more, these (all with nice pictures), I suggest:1 2 3.

Furthermore, there are networks that take a crudely drawn image / sketch, and transfer it to a photo, as shown in this video (for more: neural doodle, Neural Photo Editor blog). See low resolution example below [from the former github link]:

TL-GAN (update 2018)

I wrote this text in October 2017, but now, before posting it, there's a new advancement, introducing "Transparent Latent-space GAN" (TL-GAN), that I believe shows the direction forward for a pioneer ML facegen. Below is a quote from the author's blog post:

So the idea here is not only that all points in latent space should map to a believable face, but each dimension of latent space should have "interpretable meaning" for us humans. e.g. one dimension for sad-happy face, one for old-young face, one dimension for amount of beard, etc. This will allow you to generate random faces with a set of defined features (e.g. one eyed space pirate).TL-GAN wrote:Content creation: Imagine if an advertisement company could automatically generate attractive product images that match the content and style of the webpage where these images are inserted; [or] a new game could allow players to create realistic avatars based simple descriptions.

Note how you can move on the sex-axis, going from woman (to Tom Cruise?) to man

The code takes a random 512-dimensional vector of noise, i.e. random numbers specifying a point in 512D latent space, as input and generates a 1024x1024px image. (Model is trained on the CelebA dataset, consisting of 30k face images).

The result can be seen in this youtube (30 seconds), or gif

He's model is available on github and he accepts PRs, so it will continue to improve.

In closing

If still not overwhelmed, I'd recommend a fast browsing through this 30 amazing applications of deep learning and look at the pictures.

For those wanting to understand ML from the basics / beginners, I can recommend this series: "Worlds easiest introduction to machine learning", also, if wanting to understand facebook computer vision algorithms this is good.

There are other neural network / machine learning algorithm applications in game design, e.g Neural Network Ambient Occlusion and Subdivision Modelling.

Also, I really should have covered InfoGANs [16].

References

[1]

"Progressive Growing of GANs for Improved Quality, Stability, and Variation"

https://arxiv.org/abs/1710.10196

[2]

"ImageNet Classification with Deep Convolutional Neural Networks", (2012)

http://vision.stanford.edu/teaching/cs2 ... unghee.pdf

[3]

"How Convolutional Neural Networks work"

https://www.youtube.com/watch?v=FmpDIaiMIeA

[4]

https://adeshpande3.github.io/adeshpand ... -Networks/

https://adeshpande3.github.io/adeshpand ... ks-Part-2/

[5]

"Generative Adversarial Networks",

https://arxiv.org/abs/1406.2661

[6]

https://en.wikipedia.org/wiki/Lenna

[7]

"Generative Adversarial Text to Image Synthesis"

https://arxiv.org/abs/1605.05396

"Learning Deep Representations of Fine-grained Visual Descriptions"

https://arxiv.org/abs/1605.05395

https://adeshpande3.github.io/adeshpand ... arial-Nets

[8]

"Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network" (2017)

https://arxiv.org/abs/1609.04802

[9]

"Deep predictive coding networks for video prediction and unsupervised learning"

https://arxiv.org/abs/1605.08104

[10]

"Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks"

https://arxiv.org/abs/1511.06434

[11]

https://medium.com/@ageitgey/abusing-ge ... 5d9b96cee7

[12]

"Towards the Automatic Anime Characters Creation with Generative Adversarial Networks"

https://arxiv.org/abs/1708.05509

[13]

"A Neural Algorithm of Artistic Style" https://arxiv.org/abs/1508.06576

[14]

"Deep Photo Style Transfer"

https://arxiv.org/abs/1703.07511

[15]

"Exploring the structure of a real-time, arbitrary neural artistic stylization network"

https://arxiv.org/abs/1705.06830

[16]

https://arxiv.org/abs/1606.03657